
Current Times from Gal Nissim on Vimeo.

Video by Gal Nissim & Michelle Hessel

Design Challenge: Design, build, and exhibit an interactive window display spanning two windows, based on the theme of here time.

The Team

Gal Nissim: Body Scan, Fabrication, Mixamo Design, Max Patch, Unreal Engine Integration of 3D Avatars, Scenic Design

Lindsey Johnson: Body Scan, Chat App Designer and Developer, Scenic Design

Angela Perrone: Body Scan, Fabrication, TV Stand Design, 3D Mesh Work (merging meshes, texture baking, mesh and texture editing)

Michelle Hessel: Body Scan, Fabrication, Unreal Engine Scenic Design, Unreal Engine Integration of 3D Avatars, Scenic Design

Project & Concept Overview

Our concept and design, Current Times, is a social commentary on the current state of human communication: we exist in a physical world yet are trapped in the digital one, communicating with the outside only through digital devices.

Window 1: Physical World: This window features a live person sitting at their computer. They do not communicate with or acknowledge the world outside the window, because they communicate with it only through the digital world found in window two. The actor, played by myself, Michelle Hessel, Gal Nissim, or Lindsey Johnson, controls the avatar and chat in the second window. The aesthetic is a bleached canvas of a bedroom, where everything is white except the person. When a live person is not in the room, a sleeping dummy (in color) is staged in their place.

Window 2: Digital World: This window features two large screens against a white background. Screen one hosts an avatar of the live person in the window. Screen two features a chat box, where visitors can log in to http://currenttimes.us/ to chat with the avatar in real time. The avatars can respond via chat and/or with actions ranging from emotions to dancing. The actions and chat are controlled by the actor in the first window. When the live person is not present, an avatar of the dummy is shown on screen, sleeping on a cloud in the sky. Icons of the avatar's social media accounts also appear on clouds, showing the number of missed messages and posts that accumulate over time.

My Role

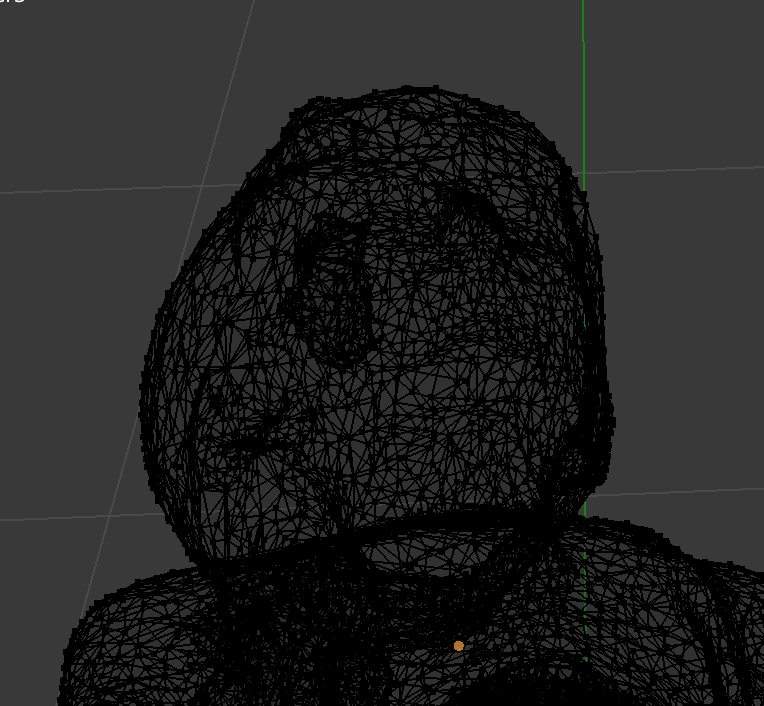

I took on the role of merging 3D body meshes, baking textures, and exporting the 3D avatars before they were animated in Mixamo and Unreal Engine. Merging multiple meshes is simple; merging the textures of multiple objects, however, is a complicated recipe. Thanks to the help of Quest Kennelly, resident Blender wizard, I was able to learn and master this process. This was my journey.

Gathering the Meshes

To create the avatars, we needed 3D body scans of each individual who would be sitting in the window, plus the avatar. To accomplish this, we used:

iPad mini

Structure Sensor

Skanect (Mac software)

Structure (iPad app for scanning)

Preparing & Merging the Meshes

To get the highest resolution, we needed to capture two separate scans: one of just the face and one of the entire body. This meant that the head and body meshes had to be merged into one mesh.

I brought the two meshes into Blender, lined them up, and joined them by selecting both and pressing CTRL-J.

HOWEVER, joining meshes does not blend their textures. To make the textures match on the new combined mesh, I needed to bake them onto it, an involved process that I outline in detail below.
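Blender's join is a built-in operator, so no code was needed for this step. Still, a small pure-Python sketch of what joining does to the geometry helps show why the textures needed extra work. The `Mesh` and `JoinedMesh` types, the `material` labels, and `join_meshes` are hypothetical stand-ins and are not Blender's data model or API. The sketch concatenates the vertices and shifts each face's vertex indices by an offset. Every face still points at the texture it came with, so nothing is blended.

```python
from __future__ import annotations

from dataclasses import dataclass

Vertex = tuple[float, float, float]


@dataclass
class Mesh:
    vertices: list[Vertex]
    faces: list[tuple[int, ...]]  # each face indexes into this mesh's own vertices
    material: str  # hypothetical label for the texture that came with this scan


@dataclass
class JoinedMesh:
    vertices: list[Vertex]
    faces: list[tuple[int, ...]]
    face_materials: list[str]  # which original texture each face still uses


def join_meshes(meshes: list[Mesh]) -> JoinedMesh:
    """Combine meshes into one, roughly what a join does to the geometry."""
    vertices: list[Vertex] = []
    faces: list[tuple[int, ...]] = []
    face_materials: list[str] = []
    for mesh in meshes:
        offset = len(vertices)  # indices of later meshes shift past earlier vertices
        vertices.extend(mesh.vertices)
        for face in mesh.faces:
            faces.append(tuple(i + offset for i in face))
            face_materials.append(mesh.material)  # texture is carried over, not merged
    return JoinedMesh(vertices, faces, face_materials)


if __name__ == "__main__":
    head = Mesh([(0, 0, 1.6), (0.1, 0, 1.6), (0, 0.1, 1.7)], [(0, 1, 2)], "head_scan")
    body = Mesh([(0, 0, 0), (0.3, 0, 0), (0, 0.3, 1.5)], [(0, 1, 2)], "body_scan")
    joined = join_meshes([head, body])
    print(joined.faces)           # [(0, 1, 2), (3, 4, 5)]
    print(joined.face_materials)  # ['head_scan', 'body_scan']
```

The joined result is one mesh, but it still uses two separate textures. Baking exists to collapse them into a single image.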

The Process of Baking Textures

Baking the textures took a lot of investigation and testing in Blender. Quest Kennelly, Blender wizard, was a huge help in teaching me Blender and how to accomplish this task. This is an in-depth tutorial that I put together that walks step by step through the process used to create our final 3D models.

Baking Textures In Blender: A Micro Tutorial
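The tutorial covers Blender's own bake tools. To give a rough idea of what a bake computes, here is a pure-Python sketch of the core idea. For every pixel of the new texture, it checks whether the pixel falls inside a triangle of the new UV layout. It then finds the same surface point in the old UV layout using barycentric coordinates and copies that color across. The sketch assumes both layouts describe the same triangle, so the weights transfer directly. When baking from one object onto another (for example with Cycles' "Selected to Active" option), Blender casts rays between the surfaces instead. Treat `barycentric`, `sample`, and `bake_triangle` as hypothetical helpers, not the workflow used for this project or Blender's API.

```python
from __future__ import annotations

Color = tuple[int, int, int]
Texture = list[list[Color]]  # rows of RGB pixels; row 0 is the top of the image
UV = tuple[float, float]


def barycentric(p: UV, a: UV, b: UV, c: UV) -> tuple[float, float, float] | None:
    """Barycentric weights of point p in triangle abc (None if degenerate)."""
    det = (b[1] - c[1]) * (a[0] - c[0]) + (c[0] - b[0]) * (a[1] - c[1])
    if det == 0:
        return None
    w1 = ((b[1] - c[1]) * (p[0] - c[0]) + (c[0] - b[0]) * (p[1] - c[1])) / det
    w2 = ((c[1] - a[1]) * (p[0] - c[0]) + (a[0] - c[0]) * (p[1] - c[1])) / det
    return w1, w2, 1.0 - w1 - w2


def sample(tex: Texture, uv: UV) -> Color:
    """Nearest-pixel lookup; UV (0, 0) is the bottom-left corner, as in Blender."""
    h, w = len(tex), len(tex[0])
    x = min(max(int(uv[0] * w), 0), w - 1)
    y = min(max(int((1.0 - uv[1]) * h), 0), h - 1)
    return tex[y][x]


def bake_triangle(src: Texture, src_uvs: tuple[UV, UV, UV],
                  dst: Texture, dst_uvs: tuple[UV, UV, UV]) -> int:
    """Copy one triangle's colors from src's UV layout into dst's layout.

    Returns the number of destination pixels written.
    """
    h, w = len(dst), len(dst[0])
    written = 0
    for y in range(h):
        for x in range(w):
            center = ((x + 0.5) / w, 1.0 - (y + 0.5) / h)  # pixel center in UV space
            weights = barycentric(center, *dst_uvs)
            if weights is None or min(weights) < 0:
                continue  # pixel lies outside this triangle's new UV island
            # Same surface point, expressed in the source texture's UV layout.
            u = sum(wt * uv[0] for wt, uv in zip(weights, src_uvs))
            v = sum(wt * uv[1] for wt, uv in zip(weights, src_uvs))
            dst[y][x] = sample(src, (u, v))
            written += 1
    return written
```

A real bake repeats this for every triangle of the merged mesh. Pixels that no triangle covers are left untouched, which is one way gaps can appear in a baked texture.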


Final Touches

The textures baked nicely but not perfectly. Once the texture was baked and matched the merged mesh, I went over the entire surface, filling in any missing holes.

This was a bit tedious but worth it to make the meshes as true-to-life as possible. By viewing the texture's UV map while also looking at the mesh in Edit Mode, I could select the empty or incorrect vertices in the edit window and see their locations highlighted in the UV map. I then moved the highlighted vertices onto the appropriate, corresponding color patches in the UV map.

UV Map
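The cleanup above was done by eye in Blender. The sketch below shows the same idea in pure Python, with some assumptions. It converts each vertex's UV coordinate to a texture pixel and flags vertices whose pixel was never filled by the bake. It assumes empty pixels were left pure black, which is a hypothetical fill color. `nearest_filled_uv` then suggests the closest filled pixel as a new UV position. "Nearest" is only a crude stand-in for choosing the *corresponding* color patch, which needed human judgment. All function names here are hypothetical.

```python
from __future__ import annotations

Color = tuple[int, int, int]
Texture = list[list[Color]]  # rows of RGB pixels; row 0 is the top of the image

# Assumption: pixels the bake never reached were left at this fill color.
EMPTY: Color = (0, 0, 0)


def uv_to_pixel(u: float, v: float, width: int, height: int) -> tuple[int, int]:
    """Map a UV coordinate (origin bottom-left) to an (x, y) pixel (origin top-left)."""
    x = min(max(int(u * width), 0), width - 1)
    y = min(max(int((1.0 - v) * height), 0), height - 1)
    return x, y


def find_empty_vertices(uvs: list[tuple[float, float]], tex: Texture,
                        empty: Color = EMPTY) -> list[int]:
    """Indices of vertices whose UV lands on an unbaked (empty) pixel."""
    height, width = len(tex), len(tex[0])
    flagged = []
    for index, (u, v) in enumerate(uvs):
        x, y = uv_to_pixel(u, v, width, height)
        if tex[y][x] == empty:
            flagged.append(index)
    return flagged


def nearest_filled_uv(u: float, v: float, tex: Texture,
                      empty: Color = EMPTY) -> tuple[float, float] | None:
    """UV of the closest non-empty pixel center, or None if the texture is empty."""
    height, width = len(tex), len(tex[0])
    px, py = uv_to_pixel(u, v, width, height)
    best = None
    for y in range(height):
        for x in range(width):
            if tex[y][x] == empty:
                continue
            dist = (x - px) ** 2 + (y - py) ** 2  # squared distance in pixels
            if best is None or dist < best[0]:
                best = (dist, x, y)
    if best is None:
        return None
    _, x, y = best
    return (x + 0.5) / width, 1.0 - (y + 0.5) / height
```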

[INSERT IMAGES OF FINAL EXPORTS]

Other Noteworthy Info for Blender

Things learned that one needs to consider:

Scale when importing into Unity or Unreal


One unit (1 uu) in Unreal is equal to 1 cm, so keep this in mind when scaling your object.

This is a great tutorial for sizing.
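Here is a short sketch of the unit arithmetic. It assumes Blender's default metric setup, where 1 Blender unit = 1 m at a Unit Scale of 1.0. The function names and the `CM_PER_ENGINE_UNIT` table are hypothetical. The Unreal figure is the one given above, and the Unity figure reflects Unity's usual 1 unit = 1 m convention. Exporter settings can apply their own scaling on top of this, and the sketch ignores that.

```python
from __future__ import annotations

# Centimeters covered by one engine unit. Unreal: 1 uu = 1 cm.
# Unity: 1 unit is treated as 1 m by convention.
CM_PER_ENGINE_UNIT = {"unreal": 1.0, "unity": 100.0}


def blender_to_engine_units(length_bu: float, engine: str, unit_scale: float = 1.0) -> float:
    """Convert a Blender length into engine units.

    Assumes Blender's metric unit system, where one Blender unit is
    `unit_scale` meters (1.0 is Blender's default).
    """
    centimeters = length_bu * unit_scale * 100.0
    return centimeters / CM_PER_ENGINE_UNIT[engine]


def import_scale_factor(measured: float, expected: float) -> float:
    """Uniform scale that brings an imported object from `measured` to `expected` size."""
    return expected / measured


if __name__ == "__main__":
    height_bu = 1.7  # hypothetical avatar height in Blender units
    print(blender_to_engine_units(height_bu, "unreal"))  # roughly 170 uu
    print(blender_to_engine_units(height_bu, "unity"))   # roughly 1.7 units
    # An avatar that shows up only 1.7 uu tall in Unreal needs about 100x scale.
    print(import_scale_factor(1.7, 170.0))
```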

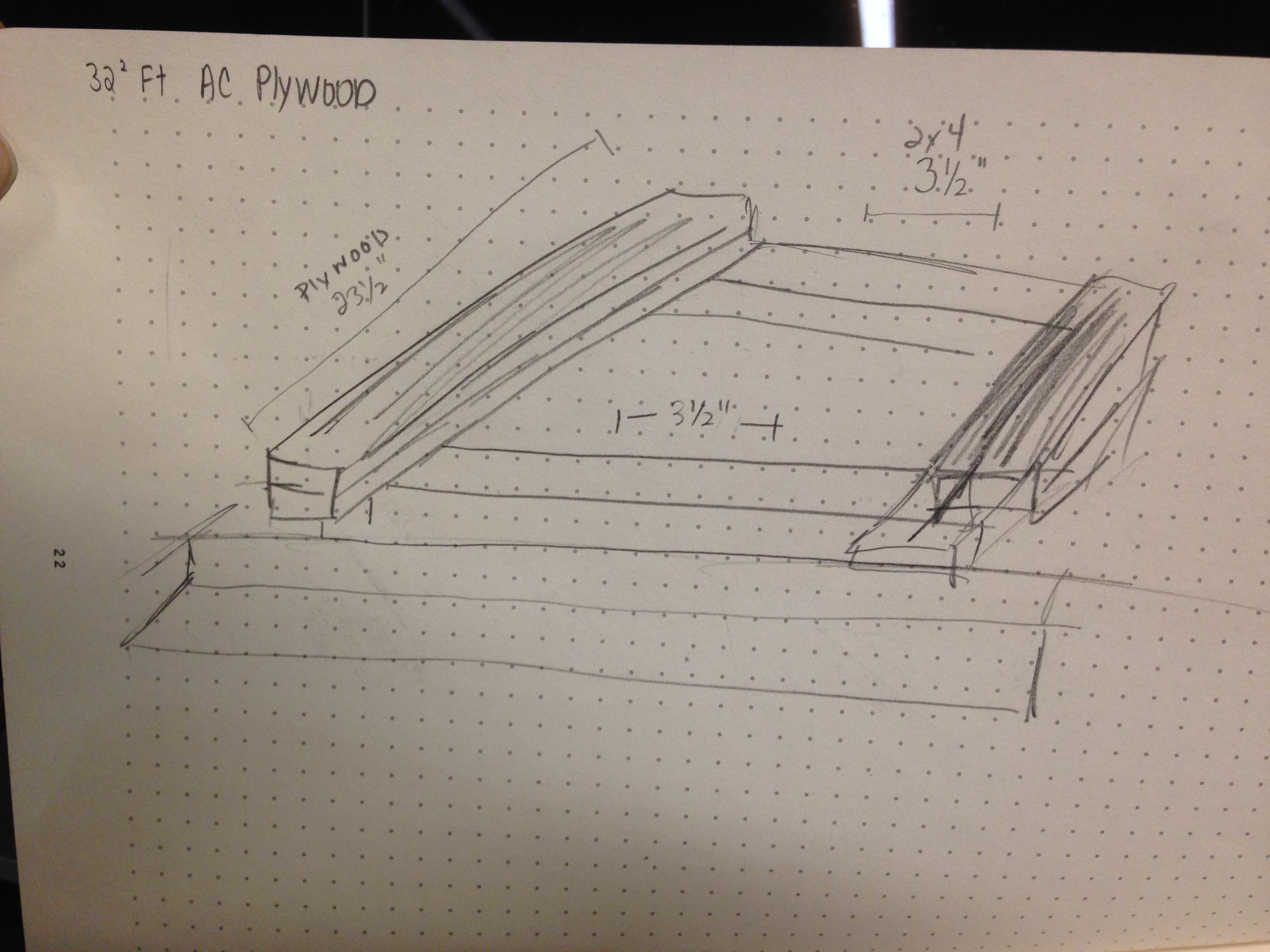

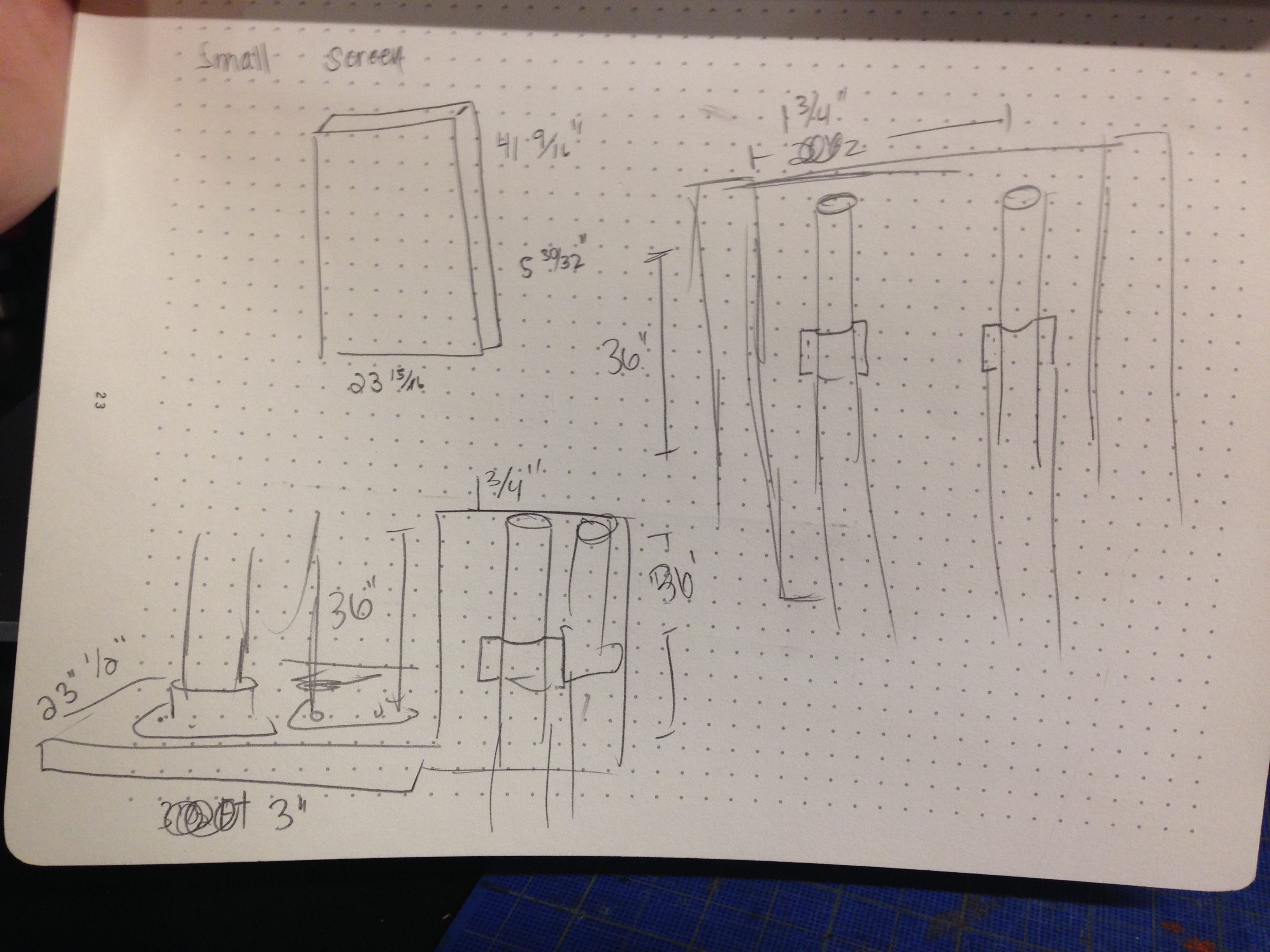

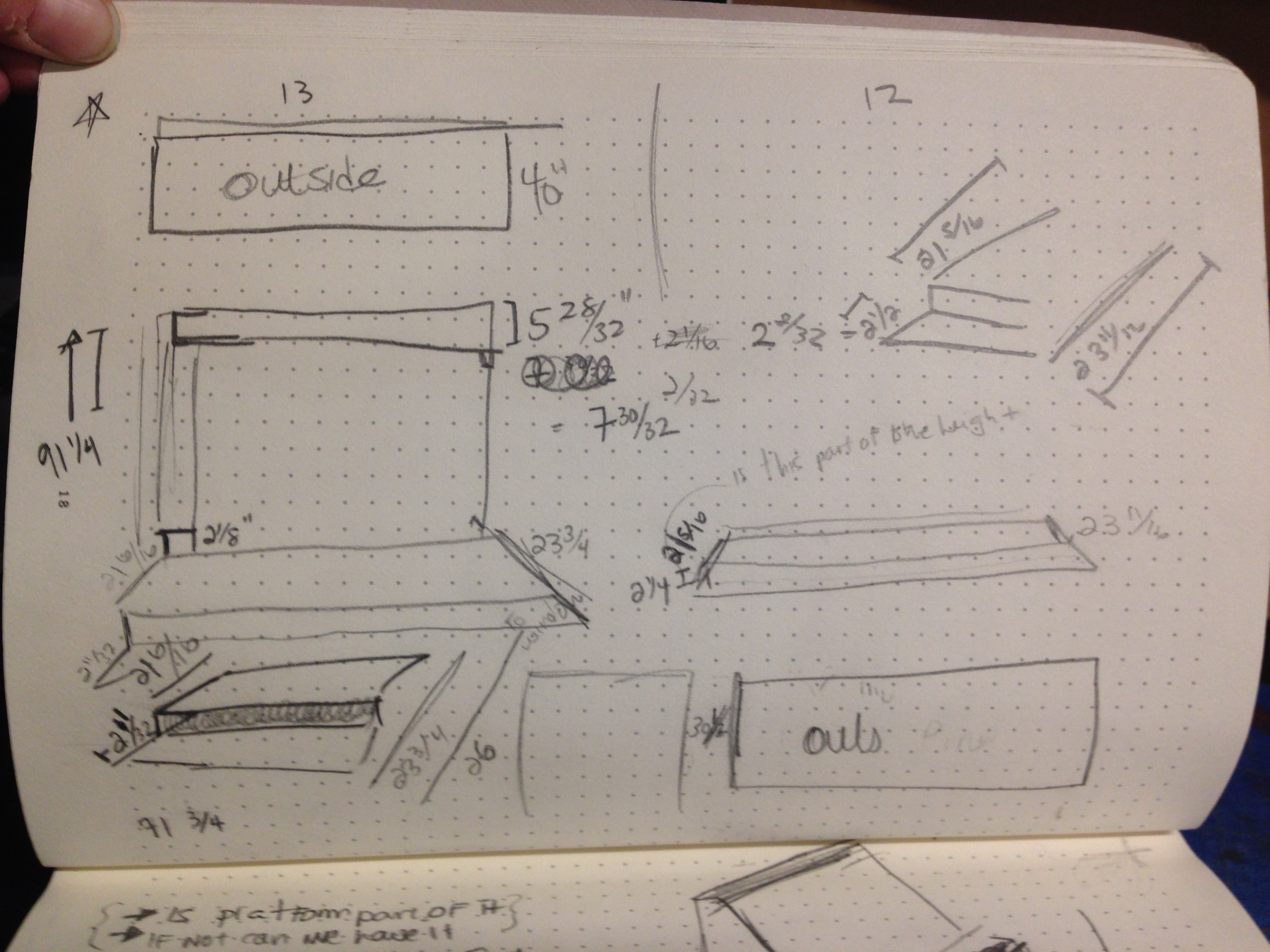

Designing the Screen Mounts

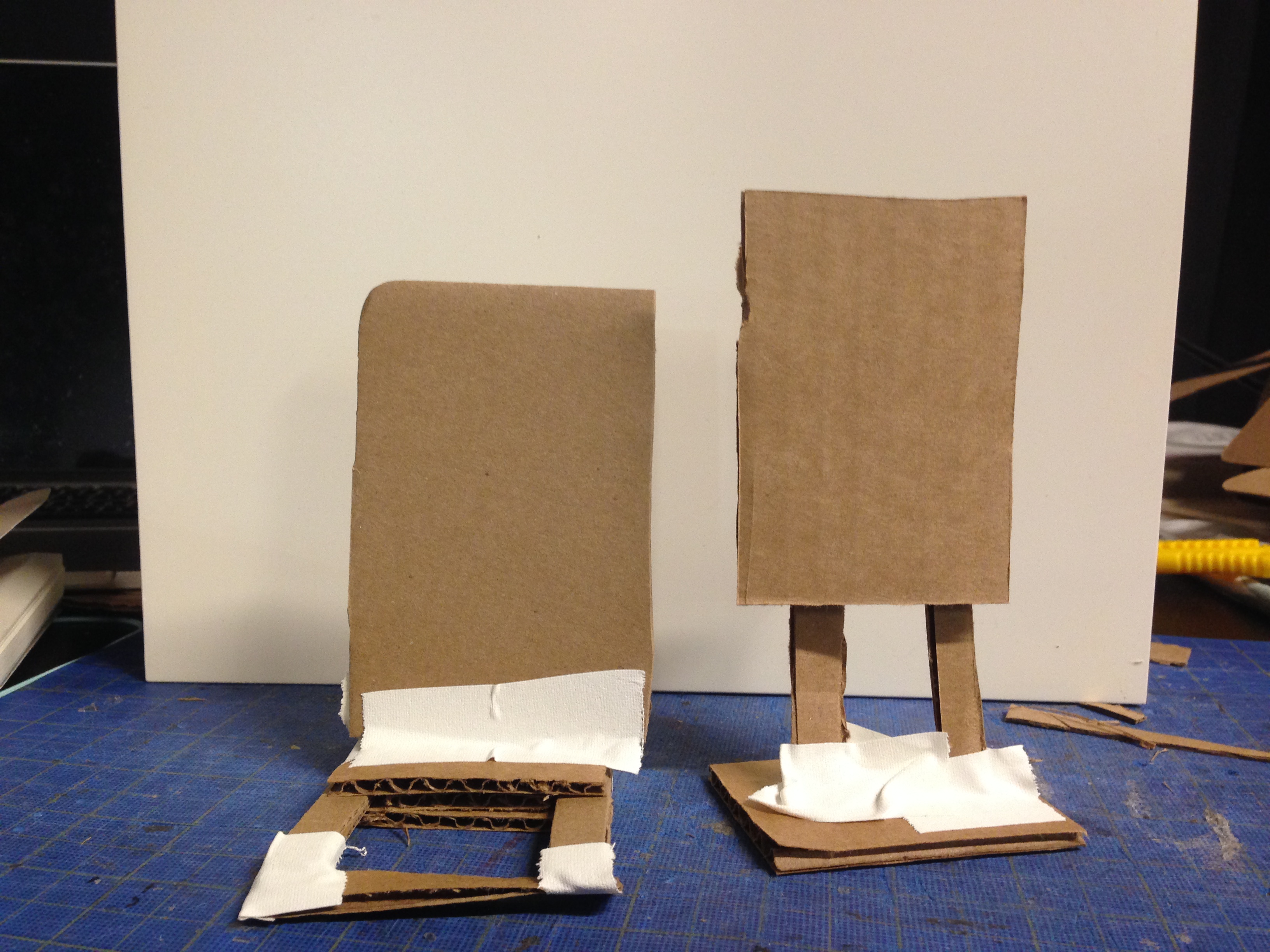

Two large screens were needed in the digital window, but with a shallow depth and no wall to mount them on, placing the screens posed a challenge. After consulting with Marlon Evans and looking at Shir David's mounts in other windows, I came up with these mount designs for the two screens, which differed in size and weight:

The results:

Setting Up & Connecting


Current Times, The Making of an Interactive Pop-Up Window Display from Angela at ITP on Vimeo.

The Installation

The installation was up from November 11th through 13th at the NYU Skirball Center for the Performing Arts, located at the corner of 3rd Street and LaGuardia Place.


Challenges

Throughout the process, we faced various challenges that we were able to solve. Our first issue was the quality of the body scans. The need to merge meshes and bake textures grew out of our inability to capture proper definition and high quality in the facial features. We decided to use two body scans, one of the head and one of the body, and then merge the two together. Figuring out how to bake textures onto merged meshes was another hurdle, which we tackled with the help of Quest Kennelly.

Another big challenge was getting screen mounts to fit into the designated window depth without access to a wall to mount on. We designed new mounts to fit the space.

On the day of the opening, we had an issue with connectivity. The internet was required to run the chat app and remote desktop, which worked as long as the laptop was not in the window. As soon as it crossed the threshold into the window, the internet disconnected. After some troubleshooting, we found that the MacBook model we were using was too old and its specs were preventing the internet from working. We swapped in a different laptop and had a smooth run.
